-

Google promised a fix after its photo-categorization software labeled black people as gorillas in 2015. Their “fix” was to censor “gorilla” from their search, as well as “chimp”, “chimpanzee” and “monkey”. That’s how bad the technology is. wired.com/story/when-it-comes-to-gorillas-google-photos-remains-blind/

-

…in reply to @axbom

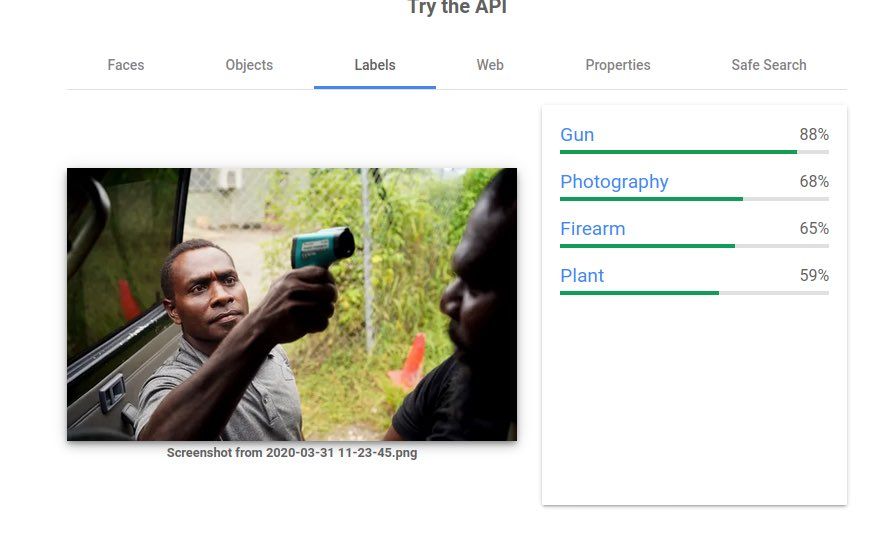

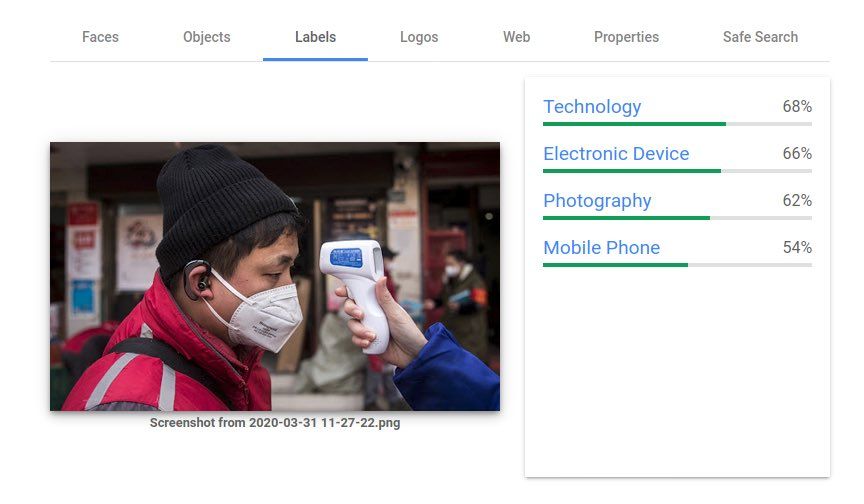

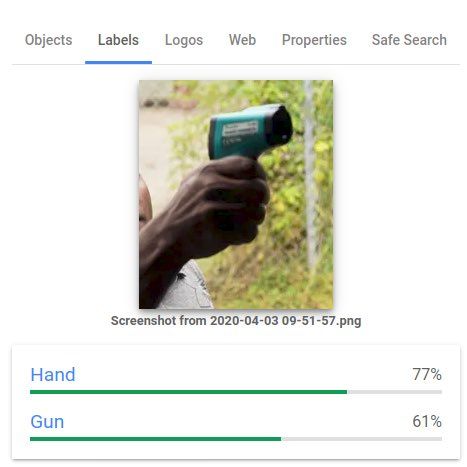

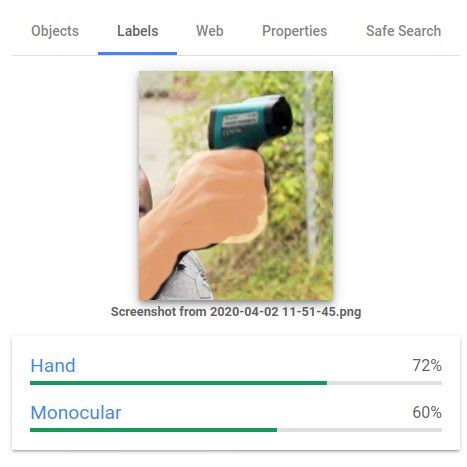

Again, as they always do, Google apologized. algorithmwatch.org/en/google-vision-racism/ “In the absence of more rigorous testing, it is impossible to say that the system is biased,” they write. Gee, I wonder who should perform that rigorous testing…

-

…in reply to @axbom

Remember when Amazon’s AI-based recruiting tool proved to be biased against women? They couldn’t fix that either. Their solution was to scrap it. reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G